Quadruped Pupper

Spring 2022

CS Independent Study + Stanford Robotics Team

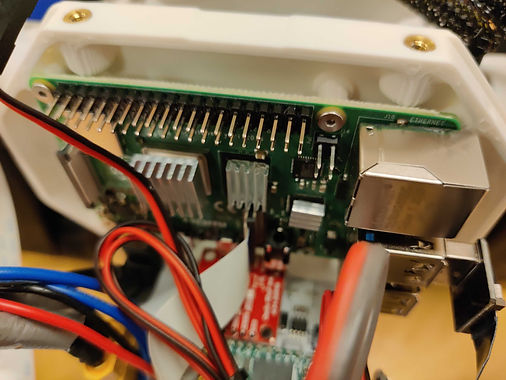

We developed Pupper, a quadruped robot, to help K-12 and undergraduate students get involved in robotics research. The code is open source, and the design is inspired by Boston Dynamics' Spot.

Through this independent study, we fine-tuned Pupper's motor control and explored forward and inverse kinematics, system identification, and embodied-AI concepts including reinforcement learning and simulation. We built a pair of teleoperated arms with haptic feedback, programmed a robot arm to learn to move by itself, optimized a trotting gait, and taught Pupper to follow a human's commands using computer-vision pose detection on the OpenCV AI Kit (OAK-D).

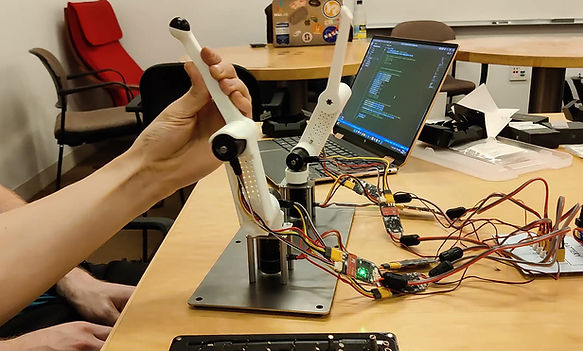

We built two robot arms that mirror each other’s motion. When you move the leader arm, the follower reads the leader's encoder values and uses inverse kinematics to work out the joint motion it needs to reach the exact same position. Add a force multiplier on the follower and haptic feedback on the leader, and you get the basis of a surgical robot!
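To make the leader/follower idea concrete, here is a minimal sketch of one control step, assuming a simple PD tracking law. The function name, the gains `kp` and `kd`, and the `force_multiplier` value are hypothetical choices for illustration, not the values we ran on the robot.

```python
def teleop_step(leader_angles, follower_angles, follower_velocities,
                kp=4.0, kd=0.1, force_multiplier=2.0):
    """One step of a leader/follower loop (illustrative sketch only).

    Returns (follower_torques, leader_torques), one value per joint.
    """
    # PD tracking effort: pull each follower joint toward the leader joint
    # and damp the follower's own velocity.
    tracking = [kp * (ql - qf) - kd * vf
                for ql, qf, vf in zip(leader_angles,
                                      follower_angles,
                                      follower_velocities)]
    # Force multiplier: the follower applies a scaled-up version of the effort.
    follower_torques = [force_multiplier * t for t in tracking]
    # Haptic feedback: the leader feels the opposite of the tracking effort,
    # so a blocked follower shows up as resistance in the operator's hand.
    leader_torques = [-t for t in tracking]
    return follower_torques, leader_torques


if __name__ == "__main__":
    follower, leader = teleop_step([0.5, 0.0], [0.0, 0.0], [0.0, 0.0])
    print(follower, leader)
```

Feeding the unscaled effort back to the leader is one design choice among several; a real system would also need torque limits for safety.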

In another iteration, we made our robot arms match each other's end-effector positions by using inverse kinematics to compute the joint angle commands. We tested in simulation before deploying on the robot. The steps to achieve this are:

- Calculate the Cartesian end-effector position of the right arm using forward kinematics.
- Use this result to calculate the Cartesian position of the right arm’s end-effector relative to the base of the left arm.
- Disable the right arm’s torque by de-activating the motors in the right arm.
- Deploy to robot and check the reported position.
- Figure out what to add/subtract from the right arm’s position to get the corresponding position relative to the left arm.
- Deploy to robot and check that the position relative to the left arm is correct.
- Use inverse kinematics to move the left arm to this position in the simulator.
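The math behind these steps can be sketched with a simplified planar two-link arm. This is an illustration under assumptions, not our robot code: the link lengths `LINK_1` and `LINK_2`, the `BASE_OFFSET` between the two arm bases, and all function names are hypothetical, and the real arms have more joints than this.

```python
import math

LINK_1 = 0.08               # hypothetical upper link length (m)
LINK_2 = 0.11               # hypothetical lower link length (m)
BASE_OFFSET = (0.20, 0.0)   # hypothetical right-arm base position in the left-arm frame (m)


def forward_kinematics(q1, q2):
    """End-effector (x, y) of a planar two-link arm for joint angles in radians."""
    x = LINK_1 * math.cos(q1) + LINK_2 * math.cos(q1 + q2)
    y = LINK_1 * math.sin(q1) + LINK_2 * math.sin(q1 + q2)
    return x, y


def right_to_left_frame(point):
    """Re-express a point from the right arm's base frame in the left arm's base frame."""
    return point[0] + BASE_OFFSET[0], point[1] + BASE_OFFSET[1]


def inverse_kinematics(x, y):
    """Joint angles (q1, q2) that reach (x, y); returns the solution with q2 in [0, pi]."""
    cos_q2 = (x * x + y * y - LINK_1 ** 2 - LINK_2 ** 2) / (2 * LINK_1 * LINK_2)
    if abs(cos_q2) > 1.0:
        raise ValueError("target is outside the arm's reachable workspace")
    q2 = math.acos(cos_q2)
    q1 = math.atan2(y, x) - math.atan2(LINK_2 * math.sin(q2),
                                       LINK_1 + LINK_2 * math.cos(q2))
    return q1, q2


if __name__ == "__main__":
    right_tip = forward_kinematics(2.0, 1.0)      # right arm pose read from encoders
    target = right_to_left_frame(right_tip)       # same point, seen from the left base
    left_angles = inverse_kinematics(*target)     # joint commands for the left arm
    print(left_angles, forward_kinematics(*left_angles), target)
```

Running forward kinematics on the solved angles should reproduce the target, which is the same check we made after each deploy.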

A reinforcement learning experiment: can we train a robot arm to kick a cube as far as possible? Our reward was the distance the cube travelled. We could have improved the results by using realistic inertia values in simulation or by penalizing excessive energy expenditure.
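As a sketch of what the energy penalty could look like, the reward below subtracts a weighted estimate of mechanical work from the kick distance. This is not the reward we trained with: the function name, the `energy_weight` value, and the per-step torque/velocity inputs are all assumptions for illustration.

```python
import math


def kick_reward(cube_start, cube_end, joint_torques, joint_velocities,
                dt, energy_weight=0.01):
    """Distance the cube travelled minus a penalty on energy spent (illustrative).

    joint_torques and joint_velocities hold one tuple per simulation step,
    with one entry per joint; dt is the step duration in seconds.
    """
    distance = math.dist(cube_start, cube_end)
    # Approximate energy as the sum of |torque * angular velocity| * dt.
    energy = sum(abs(tau * omega) * dt
                 for step_tau, step_omega in zip(joint_torques, joint_velocities)
                 for tau, omega in zip(step_tau, step_omega))
    return distance - energy_weight * energy


if __name__ == "__main__":
    reward = kick_reward((0.0, 0.0, 0.0), (3.0, 4.0, 0.0),
                         joint_torques=[(1.0, 2.0)],
                         joint_velocities=[(2.0, 1.0)],
                         dt=0.5)
    print(reward)
```

The weight trades kick distance against effort, so it would need tuning: too high and the arm learns not to move at all.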

By modifying single-body pose-tracking models running on DepthAI hardware, we were able to get Pupper to follow a human based on the direction they pointed!
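One way to turn pose keypoints into a steering command is sketched below. It assumes the pose model gives elbow and wrist keypoints as normalized image coordinates (x increasing to the right), that the image is not mirrored, and that a positive yaw rate turns the robot left. The function name and thresholds are hypothetical and this is not the DepthAI API.

```python
def pointing_yaw_command(elbow, wrist, min_extent=0.1, max_yaw_rate=1.0):
    """Map a pointing forearm to a yaw-rate command (illustrative sketch).

    elbow and wrist are (x, y) keypoints in normalized image coordinates.
    Returns 0.0 when the forearm is not clearly pointing sideways.
    """
    dx = wrist[0] - elbow[0]
    if abs(dx) < min_extent:
        return 0.0              # arm hanging down or raised: no command
    if dx > 0:
        return -max_yaw_rate    # wrist toward image right: turn right
    return max_yaw_rate         # wrist toward image left: turn left


if __name__ == "__main__":
    print(pointing_yaw_command((0.5, 0.5), (0.8, 0.5)))
    print(pointing_yaw_command((0.5, 0.5), (0.2, 0.45)))
    print(pointing_yaw_command((0.5, 0.5), (0.52, 0.2)))
```

A fixed turn rate keeps the sketch simple; scaling the rate with the forearm angle would give smoother following.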