Shape Lab Data Visualization Research

Present

The Stanford SHAPE Lab explores how we can interact with digital information in a more physical and tangible way. Toward our goal of more human-centered computing, we believe that interaction must be grounded in the physical world and leverage our innate abilities for spatial cognition and dexterous manipulation with our hands.

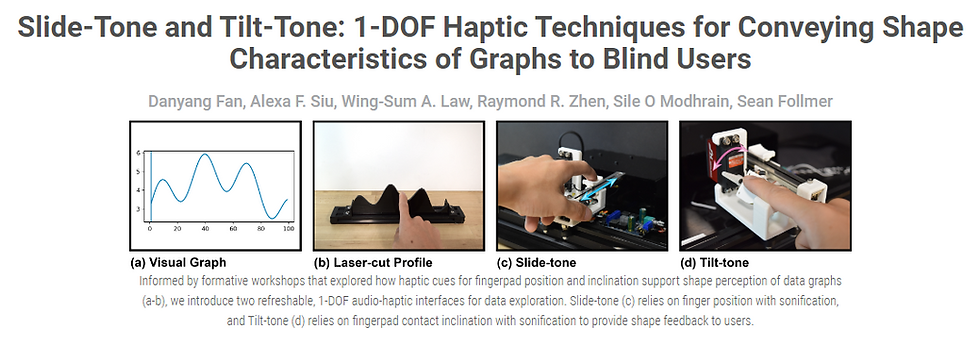

Video: https://shape.stanford.edu/research/SlideToneTiltTone/

Publication: https://dl.acm.org/doi/10.1145/3491102.3517790

Abstract: We increasingly rely on up-to-date, data-driven graphs to understand our environments and make informed decisions. However, many of the methods blind and visually impaired users (BVI) rely on to access data-driven information do not convey important shape-characteristics of graphs, are not refreshable, or are prohibitively expensive. To address these limitations, we introduce two refreshable, 1-DOF audio-haptic interfaces based on haptic cues fundamental to object shape perception. Slide-tone uses finger position with sonification, and Tilt-tone uses fingerpad contact inclination with sonification to provide shape feedback to users. Through formative design workshops (n = 3) and controlled evaluations (n = 8), we found that BVI participants appreciated the additional shape information, versatility, and reinforced understanding these interfaces provide; and that task accuracy was comparable to using interactive tactile graphics or sonification alone. Our research offers insight into the benefits, limitations, and considerations for adopting these haptic cues into a data visualization context.

Roller Robots for Haptic Contours

Present

Adapting this device into a 3D topological display.

Summer 2021

Stanford Mechanical Engineering Undergraduate Research Institute

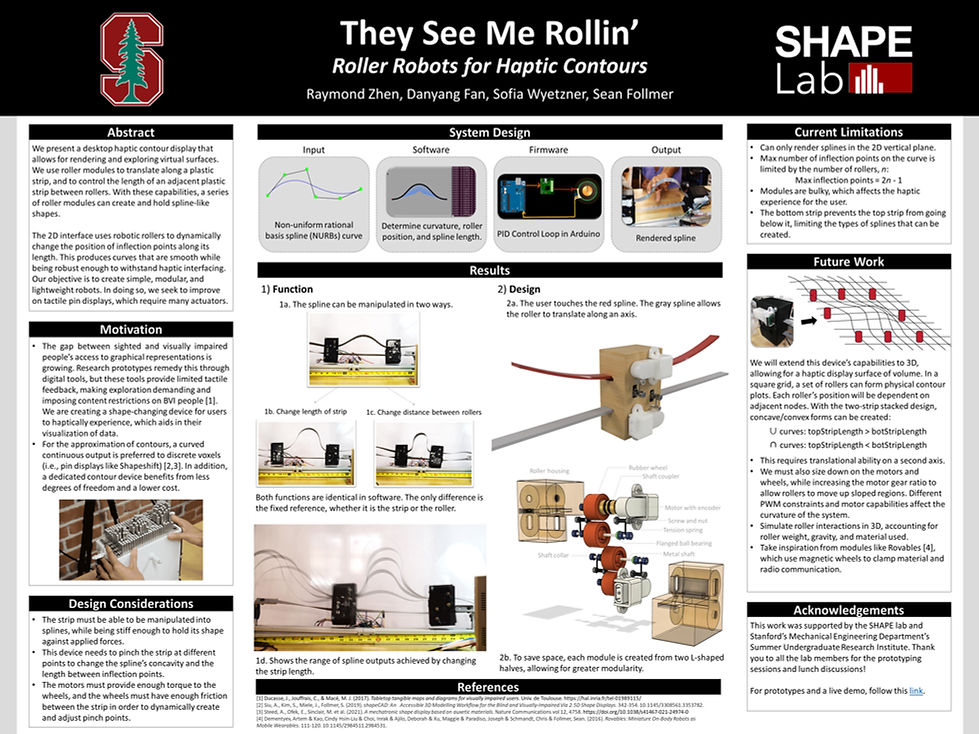

Blind and visually impaired people have limited tools for data visualization (which is needed for finances, historical trends, STEM education, etc.). Existing prototypes address this problem using digital tools only, but tactile feedback makes exploration more intuitive. I created a contour display that allows for the exploration of surfaces by manipulating a strip of material into spline-like shapes.

Neat things about the design:

• The 2D interface uses robotic rollers to dynamically change the position of inflection points along its length. This produces curves that are smooth while being robust enough to withstand haptic interfacing.

• The user inputs a NURBS curve, which is simulated to determine where the roller modules should sit relative to one another.

• Each roller module is driven by motors controlled by a PID loop running on an Arduino.
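As a rough illustration of how roller positions could be derived from a target curve, the sketch below finds the arc-length positions of the inflection points along a sampled curve. It is a minimal sketch under stated assumptions, not the simulation used in this project: the curve is a plain sampled polyline (a sine wave) rather than an evaluated NURBS curve, and the function name `inflection_arc_lengths` is hypothetical.

```python
import math


def inflection_arc_lengths(points):
    """Return arc-length positions along a polyline where the turn direction flips.

    Assumption: `points` is a list of (x, y) samples of the target curve.
    A real pipeline would sample these from the NURBS curve the user provides.
    """
    arc = 0.0
    positions = []
    prev_sign = 0
    for i in range(1, len(points) - 1):
        (x0, y0), (x1, y1), (x2, y2) = points[i - 1], points[i], points[i + 1]
        arc += math.hypot(x1 - x0, y1 - y0)  # length travelled up to points[i]
        # z-component of the cross product of consecutive segments:
        # positive for a left turn, negative for a right turn.
        cross = (x1 - x0) * (y2 - y1) - (y1 - y0) * (x2 - x1)
        sign = (cross > 0) - (cross < 0)
        if sign != 0:
            if prev_sign != 0 and sign != prev_sign:
                positions.append(arc)  # concavity changed here
            prev_sign = sign
    return positions


# Hypothetical target shape: y = sin(x) on [0, 3*pi], sampled at 601 points.
n = 600
samples = [(3 * math.pi * k / n, math.sin(3 * math.pi * k / n)) for k in range(n + 1)]
positions = inflection_arc_lengths(samples)

# Spacing between neighbouring inflection points, measured along the strip.
spacings = [b - a for a, b in zip(positions, positions[1:])]
print(positions, spacings)
```

Working in arc length rather than in x matters here because the rollers travel along the strip itself, so their spacing has to be measured along the material, not along the horizontal axis.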

My Research Experience

I originally wanted to create a haptic glove to help with curvature perception for blind and visually impaired people. However, the motivation for this project wasn't well researched: haptic gloves are effective for gauging relative heights, but they cannot convey tilt well, and tilt is essential to curvature perception. I had to adapt and ensure that my work effectively addressed a gap in existing prototypes.

After conducting my literature review, my goal was to create a contour display that allowed for rendering and exploring virtual surfaces. This would be done by manipulating a strip of material into spline-like shapes. Such a device needed to pinch at different points to change the spline's concavity and length. A roller device accomplishes this perfectly.

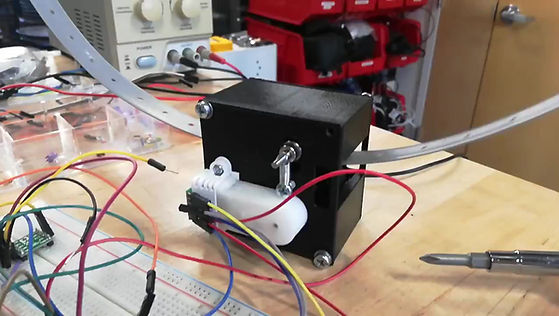

I modeled the first prototype using zip ties and electrical clips, and experimented with different materials for the strip, such as nylon and rubber. The rubber deformed permanently when bent, but wasn't plastic enough to hold its shape. I eventually settled on polyethylene (a plastic), which retained its shape but twisted in high-energy configurations.

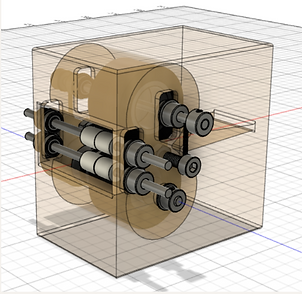

My design strategy was as follows:

- Find the force required to keep the strip down. Multiply by a factor of safety (4).

- Pick a wheel of a reasonable size, material, and ease of integration with potential motors.

- Calculate torque required based on wheel radius.

- Find motor/gearbox combo.

- CAD and see if it fits!
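The sizing steps in this strategy amount to a short calculation: scale the hold-down force by the factor of safety, multiply by the wheel radius to get torque, then look for a motor/gearbox that meets it. The sketch below shows that arithmetic. Only the factor of safety (4) comes from the strategy above; the force, the wheel radius, the motor list, and the function names are hypothetical placeholders, not measurements or parts from this project.

```python
def required_torque(hold_force_n, wheel_radius_m, safety_factor=4.0):
    """Torque (N*m) the wheel must deliver: design force times wheel radius."""
    design_force_n = hold_force_n * safety_factor
    return design_force_n * wheel_radius_m


def pick_motor(candidates, torque_needed_nm):
    """Return the smallest candidate whose rated torque meets the need, or None."""
    suitable = [m for m in candidates if m["rated_torque_nm"] >= torque_needed_nm]
    return min(suitable, key=lambda m: m["rated_torque_nm"]) if suitable else None


# Hypothetical numbers, for illustration only.
torque_nm = required_torque(hold_force_n=2.0, wheel_radius_m=0.015)
motors = [
    {"name": "motor_a", "rated_torque_nm": 0.05},
    {"name": "motor_b", "rated_torque_nm": 0.15},
    {"name": "motor_c", "rated_torque_nm": 0.40},
]
choice = pick_motor(motors, torque_nm)
print(f"need {torque_nm:.3f} N*m, chose {choice['name']}")
```

Picking the smallest motor that clears the requirement keeps each roller module light, since the safety margin is already built into the design force.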

A lot of considerations went into sourcing the motor, wheels, shaft collars, etc. Of particular note is a tension spring, which presses the two wheels together to maintain friction with the strip.

I tested the first design and was happy with its functionality. Both functions, moving the strip and translating along the strip, use the same code; the only thing that changes is which part is stationary, the strip or the roller module. Here, I got to apply what I learned in my controls class by writing a PID loop that accurately tracks where each roller is.
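For readers unfamiliar with PID control, here is a minimal position-control sketch. It is not the Arduino code from this project: it is a Python simulation, the gains are made-up values, and the plant model (the controller output is treated directly as roller velocity in mm/s) is a simplifying assumption.

```python
class PID:
    """Textbook PID controller with a fixed time step."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first update

    def update(self, setpoint, measured, dt):
        error = setpoint - measured
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(target_mm, steps=500, dt=0.01):
    """Drive a simulated roller from 0 mm toward target_mm and return its final position."""
    pid = PID(kp=6.0, ki=9.0, kd=0.05)  # hypothetical gains
    position_mm = 0.0
    for _ in range(steps):
        # Assumed plant: the command is applied directly as velocity (mm/s).
        velocity = pid.update(target_mm, position_mm, dt)
        position_mm += velocity * dt
    return position_mm


final_position = simulate(50.0)
print(f"final position: {final_position:.3f} mm")
```

The same loop can serve both motions described above, because the controller only sees the position error between roller and strip; which of the two is held stationary does not change the control law.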

My second prototype got rid of the outside guide wheels entirely. I also shrank the previous design by creating L-shaped housings that fit together, saving space and weight. The result was a design that I was happy with. The next prototype I'm working on will be able to produce a 3D surface.